AWS CodeBuild is a fully managed continuous integration service that compiles source code, runs tests and produces artefacts that are ready to deploy to your environments. Because it is a managed service, there are no servers to provision or operate: AWS takes care of that entirely, and you pay only for what you use.

AWS CodePipeline is a continuous delivery service that automates the release of application and infrastructure code so that changes ship quickly and consistently. CodePipeline stitches together source stages backed by services such as AWS CodeCommit or GitHub, build stages backed by AWS CodeBuild, and deploy stages backed by AWS CodeDeploy or AWS CloudFormation. Like CodeBuild, it is fully managed, so there are no servers to provision or operate, and you pay only for what you use.

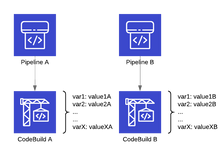

How CodePipeline and CodeBuild work together

At a high level, CodePipeline acts as the orchestrator: it lines up the stages and passes artefacts between them, so that the output artefacts of one stage become the input artefacts of the next. The artefacts travel through an S3 bucket, the pipeline's artifact store, where each stage writes its outputs as zip files and later stages read them back.
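To make the artefact hand-off concrete, here is a minimal Python sketch. It assumes a pipeline with a single CodeCommit source action feeding a single CodeBuild action. `make_pipeline` and `check_artifact_flow` are hypothetical helpers, and the bucket, repository, project, pipeline and role names are placeholders. The dictionary mirrors the shape of the `pipeline` argument to boto3's `codepipeline.create_pipeline`, but the code never calls AWS. It only shows how the Build stage names the Source stage's output artefact as its input, with both kept in the S3 artifact store.

```python
"""Sketch: a CodeCommit -> CodeBuild pipeline definition and a local check
that every input artefact is produced by an earlier stage.

All resource names are hypothetical placeholders; nothing here calls AWS.
"""


def make_pipeline(bucket: str, repo: str, project: str, role_arn: str) -> dict:
    """Build a dict shaped like boto3's create_pipeline(pipeline=...) argument."""
    return {
        "name": "demo-pipeline",  # hypothetical pipeline name
        "roleArn": role_arn,
        # S3 artifact store: stages write zipped outputs here and read inputs back.
        "artifactStore": {"type": "S3", "location": bucket},
        "stages": [
            {
                "name": "Source",
                "actions": [{
                    "name": "Source",
                    "actionTypeId": {"category": "Source", "owner": "AWS",
                                     "provider": "CodeCommit", "version": "1"},
                    "configuration": {"RepositoryName": repo, "BranchName": "main"},
                    # The zipped source code becomes this named output artefact.
                    "outputArtifacts": [{"name": "SourceOutput"}],
                    "runOrder": 1,
                }],
            },
            {
                "name": "Build",
                "actions": [{
                    "name": "Build",
                    "actionTypeId": {"category": "Build", "owner": "AWS",
                                     "provider": "CodeBuild", "version": "1"},
                    "configuration": {"ProjectName": project},
                    # CodeBuild consumes the Source stage's artefact as its input...
                    "inputArtifacts": [{"name": "SourceOutput"}],
                    # ...and publishes its own results for any later stage.
                    "outputArtifacts": [{"name": "BuildOutput"}],
                    "runOrder": 1,
                }],
            },
        ],
        "version": 1,
    }


def check_artifact_flow(pipeline: dict) -> list[str]:
    """Report actions that read an artefact no earlier stage produced.

    Simplified local check (not an AWS API): it only counts outputs of
    earlier stages and ignores runOrder chaining inside a single stage.
    """
    produced: set[str] = set()
    problems: list[str] = []
    for stage in pipeline["stages"]:
        stage_outputs: set[str] = set()
        for action in stage["actions"]:
            for art in action.get("inputArtifacts", []):
                if art["name"] not in produced:
                    problems.append(
                        f"{stage['name']}/{action['name']} reads missing artifact {art['name']}"
                    )
            for art in action.get("outputArtifacts", []):
                stage_outputs.add(art["name"])
        # Outputs become available to the stages that follow.
        produced |= stage_outputs
    return problems


if __name__ == "__main__":
    pipeline = make_pipeline(
        "my-artifact-bucket",  # hypothetical bucket
        "my-repo",  # hypothetical CodeCommit repository
        "my-build-project",  # hypothetical CodeBuild project
        "arn:aws:iam::123456789012:role/my-pipeline-role",  # hypothetical role
    )
    print(check_artifact_flow(pipeline))  # → []
```

With valid credentials and real resource names, the same dictionary could in principle be passed to `boto3.client("codepipeline").create_pipeline(pipeline=pipeline)`. The local check is only a convenience for catching mismatched artefact names before making that call.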